# v1.0

## Some Old Date

I decided to create a Rust Learning Journey: a GitHub repo with exponentially more difficult projects, where the first stage is to learn Rust, and then we push beyond standard Rust.

## 06.10

I started looking at similar projects to check how to emulate a Raspberry Pi.

I found these projects for further research:

https://www.rpi4os.com/

https://github.com/bztsrc/raspi3-tutorial/

These are bare-metal OSes.

## 07.10

https://github.com/bztsrc/raspi3-tutorial/

I will stick with this repo. It's not focused on writing an OS, but

Found what I was looking for:

`qemu-system-aarch64 -M raspi3b -kernel kernel8.img`

QEMU has the option to emulate the Raspberry Pi 3B, the exact one I have.

There's no point in following this tutorial; I need to create an environment as fast as possible, and create a minimal pipeline.

So, the kernel should be in ISO format, but what are the alternatives?

I need to get to know the Raspberry Pi architecture, its boot process and so on.

## 08.10

(30 minutes)

Ok, I managed to test the emulator.

It works... I guess.

Now I have to get more into the topic.

I found that there's a special format, zImage, which QEMU can use to run a kernel.

"zImage is compressed version of Linux kernel image that's self-extracting"

I guess that reading about Rust for embedded will help me.

Rust has great documentation, and it has great books:

https://docs.rust-embedded.org/

What? There is a tutorial on embedded Rust for Raspberry Pi!!

https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials

## 09.10

Research to be done:

- Find Raspberry Pi 3B docs

- learn about boot process for raspberry

- create toolchain for rust

- learn about GDB for embedded programming.

## 10.10

Found docs for Raspberry.

## 11.10

All other models (besides the Raspberry Pi 4) use `bootcode.bin` for boot.

BCM2837 - the processor

https://www.raspberrypi.com/documentation/computers/raspberry-pi.html#boot-sequence

So, we have a boot ROM, which checks for the GPIO boot possibility.

The boot sequence checks the OTP (One-Time Programmable) block to determine which boot modes should be **enabled**.

The sequence follows:

- GPIO

- primary SD (GPIO 48-53)

- secondary SD

- NAND

- SPI

- USB

The first idea was to boot with secondary SD.. but actually it would be better to use GPIO ports for testing.

https://www.raspberrypi.com/documentation/computers/raspberry-pi.html#gpio-boot-mode

- [x] Need to check which version of Raspberry Pi 3B I have: there's BCM2837 and BCM2837B0

The model is: BCM2837RIFBG

Ok! That GPIO is never gonna ever happen!

It is used to select the boot device by GPIO, and it also requires setting the OTP.

Once the OTP is programmed, it deliberately blows a fuse! Inside the chip!

And since you cannot get into the chip, you cannot undo the settings!

Ok then, booting the thing.

First I should determine what boot options are enabled.

But I will just test the USB boot mode.

Ok, I can check the config.

"The Raspberry Pi uses a configuration file instead of the [BIOS](https://en.wikipedia.org/wiki/BIOS)"

## 12.10

There is no BIOS, but you have config files instead.

`vcgencmd get_config` - this returns current configuration

So, we need a boot partition:

The boot partition has: `config.txt`, `bootcode.bin`, `start.elf`

there's also `autoboot.txt` config

Some interesting options:

`kernel` - default is `kernel7.img` for my model.

`arm_64bit` - if `1` then starts in 64-bit mode

TF is `ramfs`?

https://forums.raspberrypi.com/viewtopic.php?t=326285

So, the `initramfs` is a tiny filesystem that has the essentials, such as mounting partitions (because we have heck no way to mount modules)

https://wiki.archlinux.org/title/Arch_boot_process#initramfs

Right, it is the initial RAM file system. Its purpose is to serve as an initial root, so the system can bootstrap to the point where it is replaced with the proper root from

`ramfsfile`, `ramfsaddr`, `initramfs` - are configs for this.

`initramfs` - is a combination of `ramfsfile` and `ramfsaddr` (where in memory to put it)

*What?*

>If `auto_initramfs` is set to `1`, look for an initramfs file using the same rules as the kernel selection.

`disable_splash`

`enable_uart`

`os_prefix` - directory prefixes must include trailing `/`

if expected kernel and `.dtb` files are not present, prefix is ignored

prefix can be bypassed if we use absolute path `/` in options like `kernel`

TF is overlays?

It's saved with `.dtbo` format

- [ ] Read: https://github.com/raspberrypi/firmware/blob/master/boot/overlays/README

So this is the idea:

I connect the Raspberry to the PC over USB.

I "fake" a USB mass storage device on the PC (so the Raspberry thinks it reads from a pendrive)

I wire up the power pin, so I can automate rebooting the Raspberry.

I will check the other solutions:

The Rust Embedded tutorial uses a chainloader over UART.

He uses a USB to TTL serial adapter.

Welp, that's probably easier to manage.. I guess?

Now, rust.

Let's create a toolchain for that.

Based on:

https://docs.rust-embedded.org/book/intro/install/linux.html

I might need a special gdb for that.

What is `openocd`?

Oh.. that's Open-On-Chip-Debugger!

`arm-none-eabi-gdb` oh fuck! It's a bare metal debugger!! It's a bare metal!

the EABI is used for bare metal (when we don't have a kernel)

- [x] TODO: install gdb

I think this will be the best book:

https://docs.rust-embedded.org/embedonomicon/

And by that I should find the correct rustup target.

There are a lot of possible targets for me:

- armebv7r-none-eabihf

- armv7r-none-eabihf

`hf` stands for **hard float**

https://forums.raspberrypi.com/viewtopic.php?t=11177

Oh! the **hard float** means that we have a **hardware** for floats.

`hf` stands for using FPU.. *why?*

This is my target book:

https://docs.rust-embedded.org/embedonomicon/

- [x] Check if BCM2837 has FPU + find what target is for that

Have FPU,

- [ ] Create toggl/activity watch

`armebv7r-none-eabihf` - Bare ARMv7-R, Big Endian, hardfloat

`armv7r-none-eabihf` - Bare ARMv7-R, hardfloat

Raspberry Pi is Little Endian.

Now the question is: is my CPU ARMv7?

no... we have ARMv8

I don't see ARMv8 in platform support:

https://doc.rust-lang.org/nightly/rustc/platform-support.html

`thumbv8m.main-none-eabihf` - Bare ARMv8-M Mainline, hardfloat

read:

- [x] https://developer.arm.com/documentation/107656/0101/Introduction-to-Armv8-M-architecture/Baseline-and-Mainline

## 13.10

Quad-core ARM Cortex-A53.

The baseline is a minimal architecture, the mainline adds main extensions

https://www.reddit.com/r/rust/comments/me44gh/is_there_a_toolchain_for_armv8a_available_for_rust/

`aarch64-unknown-none` - there's also that.

https://forums.raspberrypi.com//viewtopic.php?t=188544

Ok, let's assume that I have chosen the target platform..

what now?

Testing Rust. Making a hello world for QEMU

It works! I guess!

Based on the Embedonomicon, I built the OS "Hello World" (the infinite loop) for 2 targets.

And the right one is: `aarch64-unknown-none`

>`qemu-system-aarch64 -M raspi3b -kernel ./aarch64-test`

## 14.10

Ok, it gets interesting.

Normally I wouldn't do that.. but for science!

I looked for dev containers. And found that the thing actually exists, and it's for VS Code.

Now I understand that I SHOULD start to containerize my dev environments.

What I need:

- Rust for compilation + debugging

- QEMU

- some extra packages

- arch

I found `Incus`, it's a Linux Containers project.

It uses LXC and QEMU, so it allows for containers and VMs.

Oh! It appears to be an actually new thing. Version 0.1 just came out.

Ok, the IDE will be VS Code.

Debugging, ... huh.. I have no idea.. I will try that in Code.

Once again:

`rust`, `cargo-binutils`, `cargo-edit`, `QEMU`, `gdb`

I will dive more on that:

https://code.visualstudio.com/docs/devcontainers/create-dev-container

Nice, I managed to do that!

It compiles, and it runs qemu!

Next, I'd like to integrate GDB with Code!

## 17.10

https://developer.arm.com/documentation/ddi0500/

## 19.10

trying to run GDB on that.

```
Remote debugging using localhost:8000
warning: while parsing target description (at line 1): Target description specified unknown architecture "aarch64"
```

I guess, I use wrong qemu? Oh, it seems that aarch64 is default gdb.

>Note from future: *no, you dummy head!* default gdb means your host architecture! ..unless you virtualize architecture also

>The Cortex-A53 processor implements the Armv8-A architecture. This includes:

> - Support for both AArch32 and AArch64 Execution states.

> - Support for all Exception levels, EL0, EL1, EL2, and EL3, in each execution state.

> - The A32 instruction set, previously called the Arm instruction set.

> - The T32 instruction set, previously called the Thumb instruction set.

> - The A64 instruction set.

>The Cortex-A53 processor **implements** the Armv8 **Debug architecture**.

The Cortex-A53 processor implements the ETMv4 (Embedded Trace Macrocell; whatever that means) architecture.

QEMU: `qemu-system-aarch64 -M raspi3b -kernel ./target/aarch64-unknown-none/debug/kernel -S -s`

`-S` freezes the CPU at startup, `-s` starts a gdbserver on the default port 1234

gdb: `target remote localhost:1234`

https://www.qemu.org/docs/master/system/arm/raspi.html

I want this:

https://developer.arm.com/documentation/dui0552/a/the-cortex-m3-processor/memory-model

But for Cortex A53:

https://developer.arm.com/documentation/ddi0500/j/Programmers-Model/About-the-programmers-model/Memory-model

no good

This is good:

https://developer.arm.com/documentation/ddi0500/j/Preface/About-this-book/Additional-reading?lang=en

ARMv8 is backward compatible with ARMv7 (https://developer.arm.com/documentation/den0024/a/ARMv8-A-Architecture-and-Processors/ARMv8-A?lang=en)

Exception Levels:

- EL0 - for applications

- EL1 - for kernels

- EL2 - for hypervisors

- EL3 - for monitor (whatever that is)
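
A quick sketch to make the levels concrete. It assumes the current level is stored in bits [3:2] of the `CurrentEL` system register; the enum and function names are made up for this note, not taken from any crate.

```rust
/// Exception levels of Armv8-A, from least to most privileged.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum ExceptionLevel {
    El0, // applications
    El1, // kernels
    El2, // hypervisors
    El3, // monitor
}

/// Decode a raw `CurrentEL` value. Assumption: the level sits in bits [3:2],
/// all other bits are ignored here.
pub fn decode_current_el(raw: u64) -> ExceptionLevel {
    match (raw >> 2) & 0b11 {
        0 => ExceptionLevel::El0,
        1 => ExceptionLevel::El1,
        2 => ExceptionLevel::El2,
        _ => ExceptionLevel::El3,
    }
}
```

On real hardware the raw value would come from reading the register (for example with `mrs`); here it is just a number, so the function can be tried on the host.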

- [ ] Read this:

- [ ] file:///home/tad1/Downloads/Armv8-A%20memory%20model%20guide.pdf

- [ ] https://developer.arm.com/documentation/101811/0103/Overview

Arm has a training site;

But damn! They fucking manually check every created account!

![[Pasted image 20231020011133.png]]

Nah, nevermind I had to wait 10 seconds.

Nevermind, it costs 99$ per year.

I actually am going to give up..

to give up on using only the documentation.

https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/blob/53074bb82244d912fa85af51d92b17d829692fad/01_wait_forever/src/main.rs

Like, this gives me far more information on this specific field.

It's my first encounter with embedded Rust, so I'm taking it easy.

## 20.10

(2 hours)

I looked at that, and actually I might come back to the Embedonomicon.

First, I need to study the memory model.

SSE - Simple Sequential Execution

a conceptual model in which the CPU behaves as if it executed instruction by instruction, in program order (no parallelism)

There are 2 concepts: instruction ordering and memory ordering.

Memory ordering is about caching, reading before writing, etc.

2 memory types:

- Normal and Device

it's information for the processor on how to interact with an address region.

**Normal Memory**

It's everything that behaves as memory (like RAM, Flash or ROM)

"Code should only be placed in locations marked as Normal"

we know the examples of adding code into mouse device memory

Normal memory doesn't have side effects on access

What might the processor do on access?

- Merge accesses (batch writes, persist only the last write)

- Access data without being explicitly told (e.g. for prefetching)

- Change the order of accesses (the processor might reorder memory operations to be more efficient)

So, the processor has the freedom to optimize

ended on 14 page

file:///home/tad1/Downloads/Armv8-A%20memory%20model%20guide.pdf

Barrier instructions are used to enforce ordering.

So, there are regions that I need to set.

For devices I need to set them to Device memory and as non-executable

Device memory types: Device_[n]G[n]R[n]E

(no) Gathering, (no) Re-ordering, (no) Early Write Acknowledgement

Gathering is merging multiple accesses into a single bus transaction.

MAIR_ELx (Memory Attribute Indirection Register) is used for memory type.

The entry about memory type has 8 bits. Yet the translation table bits are limited. Therefore they use 3 bits to encode an index into `MAIR_ELx`, so you can look up those 8 bits.

So, that means you can have max 8 different memory types.
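
To check that I got the indirection right, here is a small sketch that packs 8-bit attributes into the eight slots of a 64-bit `MAIR_ELx` value. The helper names are mine; the three attribute encodings are the commonly used ones as far as I know, so treat them as assumptions to verify against the Arm manual.

```rust
/// Attribute encodings (assumed; verify against the Arm ARM before relying on them).
pub const DEVICE_NGNRNE: u8 = 0x00;
pub const NORMAL_NON_CACHEABLE: u8 = 0x44;
pub const NORMAL_WRITE_BACK: u8 = 0xFF;

/// Return `mair` with the 8-bit attribute stored in slot `index` (0..=7).
pub fn mair_with_attr(mair: u64, index: u8, attr: u8) -> u64 {
    assert!(index < 8, "the index in a table entry is only 3 bits wide");
    let shift = u32::from(index) * 8;
    // Clear the old slot, then write the new attribute into it.
    (mair & !(0xFFu64 << shift)) | (u64::from(attr) << shift)
}

/// Look up the attribute a table entry with this 3-bit index refers to.
pub fn mair_attr(mair: u64, index: u8) -> u8 {
    assert!(index < 8, "the index in a table entry is only 3 bits wide");
    ((mair >> (u32::from(index) * 8)) & 0xFF) as u8
}
```

A translation table entry then only stores `index`; the full 8-bit memory type comes from the register.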

Permission attributes:

| AP | Unprivileged (EL0) | Privileged (EL1/2/3) |
| --- | --- | --- |
| 00 | No access | Read/write |
| 01 | Read/write | Read/write |
| 10 | No access | Read-only |
| 11 | Read-only | Read-only |
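
The table above translates directly into a lookup function. This is only a sketch of the table, with type and function names invented for this note.

```rust
/// What a given privilege level may do with a region.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum Access {
    NoAccess,
    ReadOnly,
    ReadWrite,
}

/// Decode a 2-bit AP field into (unprivileged, privileged) access,
/// following the table row by row.
pub fn decode_ap(ap: u8) -> (Access, Access) {
    match ap & 0b11 {
        0b00 => (Access::NoAccess, Access::ReadWrite),
        0b01 => (Access::ReadWrite, Access::ReadWrite),
        0b10 => (Access::NoAccess, Access::ReadOnly),
        _ => (Access::ReadOnly, Access::ReadOnly),
    }
}
```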

The `PSTATE.PAN` (Privileged Access Never) bit prevents EL1/2 from accessing data in unprivileged regions,

so the kernel cannot accidentally read or write application memory.

Execution permission

`UXN` - User Execute Never

`PXN` - Privileged Execute Never

![[address_map_arm.png]]

Access Flag

`AF=0` - region not accessed

`AF=1` - region accessed

Dirty state (armv8.1-A) - describes if

an access is aligned when the address is a multiple of the access size (for LDRH, a 16-bit load, the address must be a multiple of 2 bytes to be aligned)

instructions are little-endian

For processors that support both big-endian and little-endian, endianness is configured per Exception level.

Arm supports aliasing, but when you alias you need to set the same memory type/sub-type; same cacheability and shareability.

A virtual address has 2 stages of translation

![[arm_address_translation.png]]

When the MMU is disabled, all accesses are treated as Device. Unaligned accesses to regions marked as Device always fault.

Ok! Finally found what I was looking for!

**Bare-metal Boot Code for ARMv8-A**

https://developer.arm.com/documentation/dai0527/latest/

"In AArch64, the processor starts execution from an IMPLEMENTAION-DEFINED address, which is defined by the hardware input pins **RVBARADDR** and can be read by the RVBAR_EL3 register. You must place boot code at this address."

Arm has a special register which is used for GPR initialization: `XZR` for 64-bit and `WZR` for 32-bit.

The zero register is like /dev/null: it gives you zero, and everything written to it is discarded.

Each exception level has its own stack pointer

I see, ARM also has a System Coprocessor;

In System Coprocessor you define things like MMU, Cache, etc.

`MSR target, R`

My very first task is to **find the CPU boot address**, which is implementation specific; it's defined by hardware input pins.

## 24.10

(20 minutes)

This doesn't violate the rules, and it will be super useful:

https://github.com/qemu/qemu/blob/a95260486aa7e78d7c7194eba65cf03311ad94ad/hw/arm/raspi.c#L130

reference to boot info structure:

https://github.com/qemu/qemu/blob/master/include/hw/arm/boot.h#L40

Actually the QEMU source code is the best database for this kind of thing!

## 07.11

(1 hour)

It's been a long time... welp, I need to come back to that project

Downloaded the QEMU source; I will study it a bit until I have a clear idea of which address it boots from.

Oh.. it was at the beginning of boot.c

```c
#define KERNEL_ARGS_ADDR 0x100
#define KERNEL_NOLOAD_ADDR 0x02000000
#define KERNEL_LOAD_ADDR 0x00010000
#define KERNEL64_LOAD_ADDR 0x00080000
```

Oh.. actually I found this in `raspi.c`:

```c
#define SMPBOOT_ADDR 0x300 /* this should leave enough space for ATAGS */
#define MVBAR_ADDR 0x400 /* secure vectors */
#define BOARDSETUP_ADDR (MVBAR_ADDR + 0x20) /* board setup code */
#define FIRMWARE_ADDR_2 0x8000 /* Pi 2 loads kernel.img here by default */
#define FIRMWARE_ADDR_3 0x80000 /* Pi 3 loads kernel.img here by default */
#define SPINTABLE_ADDR 0xd8 /* Pi 3 bootloader spintable */
```

What the heck is a spin table?

[Interesting](https://patchwork.kernel.org/project/linux-arm-kernel/patch/56b0c0f2834cba63db1f75966f2ee756ab347205.1399594544.git.geoff@infradead.org/)! we can kill the CPU?

amusing

![[cpu_die.png]]

https://www.kernel.org/doc/html/v4.18/core-api/cpu_hotplug.html#architecture-s-requirements

As far as I get it, it's used for CPU hotplug

So, the spin-table appears to be a U-Boot thing.

*Das U-Boot is a kind of bootloader.*

Ok, it seems fucked up. After [reading](https://www.kernel.org/doc/Documentation/arm64/booting.txt) it seems that the spin-table is a memory address for CPUs to spin on.

What the fuck?

Ok, first I needed to understand the term **spin**.

So when we load the kernel, we use only one core.

The rest of the CPUs get a special memory region where they need to spin.

So, finally, `0x80000` is the start address for the `kernel.img`.
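
To convince myself what "spinning" means, here is a host-side simulation: a thread plays a secondary core and polls a slot until the "primary core" writes a non-zero entry address into it. This is only a sketch under my reading of the booting doc; the function names and the use of threads are assumptions for illustration, and real cores would typically sleep with `wfe` between polls.

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// A secondary core waits here until a non-zero entry address
/// is published in its spin-table slot, then returns that address.
pub fn spin_until_released(slot: &AtomicU64) -> u64 {
    loop {
        let entry = slot.load(Ordering::Acquire);
        if entry != 0 {
            return entry;
        }
        // Hint to the CPU that we are busy-waiting.
        std::hint::spin_loop();
    }
}

/// Host-side simulation: a thread stands in for the secondary core,
/// the calling thread stands in for the primary core.
pub fn simulate_release(entry: u64) -> u64 {
    let slot = Arc::new(AtomicU64::new(0));
    let secondary = {
        let slot = Arc::clone(&slot);
        thread::spawn(move || spin_until_released(&slot))
    };
    // The primary core releases the secondary by writing the entry address.
    slot.store(entry, Ordering::Release);
    secondary.join().expect("secondary thread panicked")
}
```

On the real board the secondary core would jump to the returned address instead of returning it.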

Good news, there's a crate for `aarch64` CPU:

https://docs.rs/aarch64-cpu/9.4.0/aarch64_cpu/

The Cortex-M-RT crate has an `#[entry]` [macro](https://docs.rs/cortex-m-rt-macros/latest/src/cortex_m_rt_macros/lib.rs.html#18-114); it sets the entry point, and does all the things you'd otherwise have to do in the linker file.

The [next chapter](https://docs.rust-embedded.org/book/start/hardware.html) has some questions I'd like to answer:

- CPU: Quad-core ARM Cortex-A53.

- Yes, it has FPU

- 1 GB RAM, and variable sized SD Card.

- Where RAM is mapped in address space? Still no idea

We name crates:

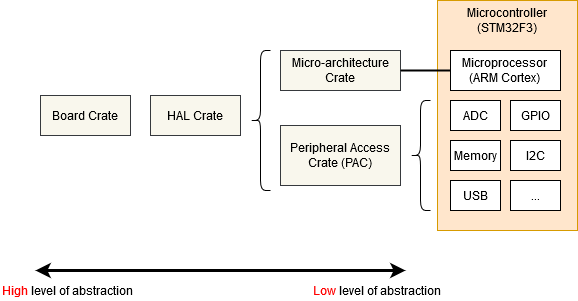

We have Micro-architecture Crate: [aarch64_cpu Crate](https://docs.rs/aarch64-cpu/9.4.0/aarch64_cpu/)

Oh damn!

I'm just stupid..

I forgot to add path to executable for GDB...

- [x] now, I'd like to build, run qemu and run gdb with already set remote

- [x] bring here those cargo tools

## 08.11

(1.5 hours)

cargo: `cargo build`

QEMU: `qemu-system-aarch64 -M raspi3b -kernel ./target/aarch64-unknown-none/debug/tad_os -S -s`

gdb: `aarch64-linux-gnu-gdb -ex "target remote :1234" -q ./target/aarch64-unknown-none/debug/tad_os`

Cargo utils:

`cargo size --bin tad_os`

```sh
cargo rustc -- --emit=obj
cargo nm -- /workspaces/tadOS/target/aarch64-unknown-none/debug/deps/tad_os-*.o | grep '[0-9]* [^N] '
```

ELF Headers:

`cargo readobj --bin tad_os -- --file-headers`

Linker Sections:

`cargo size --bin tad_os --release -- -A`

#### Linker Symbols

- `#[export_name = "foo"]` sets the symbol name to `foo`.

- `#[no_mangle]` means: use the function or variable name (not its full path) as its symbol name. `#[no_mangle] fn bar()` will produce a symbol named `bar`.

- `#[link_section = ".bar"]` places the symbol in a section named `.bar`.

reading:

https://sourceware.org/binutils/docs/ld/Scripts.html

https://docs.rust-embedded.org/embedonomicon/memory-layout.html

Ok, there's an explanation to the macro I found: https://docs.rust-embedded.org/embedonomicon/main.html

TODO:

- [x] Find out even more about linker settings I need to know

- [ ] create a launch configuration, to run everything (build, qemu and gdb)

##### Linker Scripting

https://sourceware.org/binutils/docs/ld/Basic-Script-Concepts.html

a section can be **loadable** or **allocatable**; a section that's neither loadable nor allocatable usually contains debug information.

each loadable/allocatable section has 2 addresses: Virtual Memory Address (VMA) and Load Memory Address (LMA); usually they are the same, but they differ when (for example) initialized global variables are stored in ROM and copied to RAM at startup.

Here's an example script:

```ld
SECTIONS
{
  . = 0x10000;
  .text : { *(.text) }
  . = 0x8000000;
  .data : { *(.data) }
  .bss : { *(.bss) }
}
```

Code will be loaded at `0x10000`, and data will be at `0x8000000`

ended on: https://sourceware.org/binutils/docs/ld/Simple-Example.html

I call this a success: ([2f3130f](https://github.com/tad1/tadOS/commit/2f3130f33c1c0ff7c7d9a53b8942e25a241dde36))

![[Pasted image 20231108194639.png]]

I wonder what will happen if I shift the address..

Still! Does the board read the ELF metadata?

Like it should.. I think

![[Pasted image 20231108195452.png]]

I checked if entry is set:

![[Pasted image 20231108195739.png]]

Now, the question: does `ENTRY(label)` set the entry point, or the offset of `.text`? Or both?

I mean: does the offset of `.text` also shift the entry point, if no `ENTRY(label)` is specified?

## 09.11

- [x] find how to integrate gdb with VS Code and finish configuration

Integrated GDB with [Native Debug](https://marketplace.visualstudio.com/items?itemName=webfreak.debug)

I found it interesting that all cores are executing the program.

Not the single core.. but all of them.

I need to investigate it.

## 10.11

I've been reading [about linux architecture](https://linux-kernel-labs.github.io/refs/heads/master/lectures/arch.html) for a while.

I need a plan of attack: what are the next steps?

First, I should separate platform-specific code from platform-independent code.

I should not write my own drivers; I should find and use others' drivers.

question: open source or proprietary drivers?

The OS will be single core.

I need to:

1. Load Kernel Image

2. Setup Memory Layout (MMU) and CPUs (including disabling the other cores)

3. Load all necessary code, set up interrupts

###### Configuring VS Code (30 minutes) [876e1e3](https://github.com/tad1/tadOS/commit/876e1e34f0a64915679f5811f7a025ad41f5572d)

I use the [integrated GDB from WebFreak](https://marketplace.visualstudio.com/items?itemName=webfreak.debug)

and configured `launch.json` to connect to the remote gdbserver and to load the kernel symbols.

I created configuration (`launch.json` and `tasks.json`) based on:

- https://github.com/microsoft/vscode/issues/90786

- https://github.com/microsoft/vscode/issues/90288

It builds the kernel and launches QEMU in the background. Once we stop debugging, the QEMU instance is killed.

## 11.11

(3 hours)

A better documentation of config.txt: https://gist.github.com/GrayJack/2b27cdaf9a6432da7c5d8017a1b99030

This is interesting: somehow after the first trap instruction (actually it doesn't have to be a trap instruction) I get to `0x200`.

What is that address for? Is that a clock? or perhaps an exception address?

There's an undefined instruction (`udf`) that generates an undefined instruction exception.

(20 min)

First, the trap instruction is not executed on each core.

It's random.

reading https://developer.arm.com/documentation/den0024/a/Multi-core-processors

The processor is a quad-core processor.

There's a _Multi-Processor Affinity Register_ (MPIDR_EL1) that allows determining which core the code is running on.

> However I can't see it in GDB.

MPIDR_EL1 can be changed by a VM to create an illusion of different cores. However MPIDR_EL3 cannot be changed, and represents the physical core.
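
A minimal sketch of how the core number could be pulled out of that register value. It assumes the core id sits in the lowest affinity bits and that core 0 is the boot core; with four cores two bits are enough. The names are mine, and the raw value is passed in as a plain number so this runs on the host.

```rust
/// Assumption: with four cores, the two lowest bits identify the core.
const CORE_ID_MASK: u64 = 0b11;

/// Extract the core number from a raw `MPIDR_EL1` value.
pub fn core_id(mpidr_el1: u64) -> u64 {
    mpidr_el1 & CORE_ID_MASK
}

/// Assumption: core 0 is the one that should continue booting;
/// every other core would be parked.
pub fn is_boot_core(mpidr_el1: u64) -> bool {
    core_id(mpidr_el1) == 0
}
```

In the kernel, the raw value would come from an `mrs` read of the register, and the result decides whether a core continues or gets halted.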

Ok, I found exactly what I needed that in docs:

https://cs140e.sergio.bz/docs/ARMv8-A-Programmer-Guide.pdf#G13.1218865

Exactly! Got it!

![[Pasted image 20231111155909.png]]

By default the exception vector table is at `0x0`. The offset `0x200` is the synchronous exception entry for the current EL using `SP_ELx` (I first took it for a clock signal). Therefore I need to fill this entry.

In ARM, we simply put the code into the vector table.

Each entry (in AArch64) has room for 32 instructions (`0x80` bytes).
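
To keep the table layout straight, a small offset calculator. It assumes the AArch64 layout as I understand it from the programmer's guide: four groups of four entries, each entry `0x80` bytes. The enum and function names are mine.

```rust
/// Where the exception was taken from (one group of four entries each).
#[derive(Clone, Copy)]
pub enum Source {
    CurrentElSp0 = 0,
    CurrentElSpx = 1,
    LowerElAarch64 = 2,
    LowerElAarch32 = 3,
}

/// The kind of exception within a group.
#[derive(Clone, Copy)]
pub enum Kind {
    Synchronous = 0,
    Irq = 1,
    Fiq = 2,
    SError = 3,
}

/// Assumed entry size: 0x80 bytes, i.e. 32 instructions of 4 bytes.
pub const ENTRY_SIZE: usize = 0x80;

/// Offset of an entry from the vector table base address.
pub fn vector_offset(source: Source, kind: Kind) -> usize {
    (source as usize * 4 + kind as usize) * ENTRY_SIZE
}
```

With this layout, the `0x200` I keep landing on is `vector_offset(Source::CurrentElSpx, Kind::Synchronous)`.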

It gets weird. The code doesn't execute; the CPUs stay at the `0x200` address.

There's something more going on there.

I have an awesome idea!

Let's put some nops!

Ok! It appears that adding a variable to the stack causes the exception!

Nice! I got it!

Finally! the infinite loop.

So, it may appear that I need to initialize the stack..

Investigated, I cannot even modify the stack pointer register.

It appears that I need to enable something.

It is something with stack then.

So we are at EL3 by default (`ELR` holds return address for exception, it will be handy later).

![[Pasted image 20231111193243.png]]

And also, what happens is indeed an exception.

The content of `SPSR_EL3` is `0x400003cd`
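
Decoding that value by hand is error-prone, so here is a sketch of a decoder. It assumes the AArch64 layout of `SPSR_ELx`: bit 0 selects the stack pointer, bits [3:2] hold the exception level, bit 4 flags AArch32, bits [9:6] are the D, A, I, F masks and bit 30 is the Z flag. The struct and function are made up for this note.

```rust
/// The fields of a saved program status that matter here.
#[derive(Debug, PartialEq, Eq)]
pub struct Spsr {
    pub exception_level: u8, // level the exception was taken from
    pub uses_sp_elx: bool,   // true: SP_ELx ("h"), false: SP_EL0 ("t")
    pub aarch32: bool,       // true if taken from AArch32 state
    pub daif_masked: u8,     // D, A, I, F mask bits as a 4-bit value
    pub zero_flag: bool,     // the Z condition flag
}

/// Decode a raw SPSR value (assumed AArch64 layout, see the note above).
pub fn decode_spsr(raw: u64) -> Spsr {
    Spsr {
        exception_level: ((raw >> 2) & 0b11) as u8,
        uses_sp_elx: raw & 1 == 1,
        aarch32: (raw >> 4) & 1 == 1,
        daif_masked: ((raw >> 6) & 0b1111) as u8,
        zero_flag: (raw >> 30) & 1 == 1,
    }
}
```

Feeding it `0x400003cd` gives EL3 with `SP_ELx`, all four of D, A, I, F masked, and the Z flag set, which matches being at EL3.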

So it appears that `

in GDB allows to access registers

![[Pasted image 20231111194130.png]]

I don't like that:

![[Pasted image 20231111195532.png]]

It's a displacement by 8 bytes; AArch64 requires the stack pointer to be 16-byte aligned.
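
A tiny sketch of the alignment check, assuming the stack pointer has to be a multiple of 16 bytes whenever it is used to access memory. The helper names are mine.

```rust
/// Assumed required stack pointer alignment on AArch64, in bytes.
pub const SP_ALIGNMENT: u64 = 16;

/// True if `sp` could be used as a stack pointer without an alignment fault.
pub fn is_sp_aligned(sp: u64) -> bool {
    sp % SP_ALIGNMENT == 0
}

/// Round `sp` down to the nearest aligned address
/// (down, because the stack grows towards lower addresses).
pub fn align_down(sp: u64) -> u64 {
    sp & !(SP_ALIGNMENT - 1)
}
```

So an address ending in `8` fails the check, while `0x80000` passes.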

I suppose the problem is stack pointer being pointing to

![[Pasted image 20231111200758.png]]

It appears I'm short about 18 exabytes of RAM.

Found boot code from docs:

http://classweb.ece.umd.edu/enee447.S2021/baremetal_boot_code_for_ARMv8_A_processors.pdf

Looked at [source](https://github.com/OhadRau/rust-os/blob/master/boot/src/init/init.s) of one Raspberry PI OS in rust.

And indeed they just [embedded ASM](https://doc.rust-lang.org/rust-by-example/unsafe/asm.html).

That was actually easier than I thought; the stack is now working.

I only had to embed the ASM.

[6dae285](https://github.com/tad1/tadOS/commit/6dae285a31bd80a96e6a87f84315c093a6a440ec): Now, stack is initialized and we halt other cores

## 13.11

It appears that I will also write a runtime for Cortex-A

Ok, so I will base my work on:

https://github.com/rust-embedded/cortex-m-rt/tree/master

The crate **I will need**:

https://docs.rs/embedded-sdmmc/0.6.0/embedded_sdmmc/

https://raspberrypi.stackexchange.com/questions/64062/does-raspberry-pi-3-0-support-hdmi-2-0-standard

This is what I was looking for for the looong time!

https://cs140e.sergio.bz/docs/BCM2837-ARM-Peripherals.pdf

https://docs.rs/bcm2837-lpa/0.2.1/bcm2837_lpa/

https://www.raspberrypi.com/documentation/computers/processors.html#bcm2837

BCM2837 has the same architecture as BCM2836, BCM2835

Ok, I actually find this pretty interesting, and it's escalating very quickly!

Remember [the tutorial that I discarded](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials)?

I realized 2 things.

First, it's made by the rust-embedded team.

Second, the form of the tutorial is actually reading the code!

I will learn from others' code how to write an OS!

[Here](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/blob/master/01_wait_forever/src/bsp/raspberrypi/kernel.ld) is an interesting entry, `PHDRS`. What does it do?

That's very neat. It's an abbreviation of **P**rogram **H**ea**d**e**rs**.

"*The program headers describe how the program should be loaded into memory.*"

Like in the code, we define that what we describe as `segment_code` should be readable and executable only.

###### [Next one](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/02_runtime_init)

I think, that I should get to know about `.rodata`, `.got`, and `.bss` section.

So, the guy says that he initializes DRAM by zeroing the `.bss`

.bss stands for "block started by symbol"

Here's a good source! https://wiki.osdev.org/ELF

Actually, it's lacking on sections; [here's](https://refspecs.linuxbase.org/elf/gabi4+/ch4.sheader.html) a better one.

`.bss` - simply uninitialized data; `.data` therefore holds initialized data

`.rodata` - read-only data, I guess that's where const chars are stored

`.got` - global offset table! that's an interesting one! it's used in dynamic linking, but I have no idea what the fuck is going on!
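
To see what "zeroing the `.bss`" boils down to, here is a host-side sketch in Rust. It is not the tutorial's code, only my approximation of the idea; the function names are invented and a local array stands in for the real section, whose bounds would come from linker symbols.

```rust
/// Zero every `u64` in `[start, end_exclusive)`.
///
/// # Safety
/// Both pointers must delimit one valid, writable, 8-byte-aligned region.
pub unsafe fn zero_region(start: *mut u64, end_exclusive: *mut u64) {
    let mut cursor = start;
    while cursor < end_exclusive {
        // Volatile, so the compiler cannot drop or merge the stores.
        unsafe { core::ptr::write_volatile(cursor, 0) };
        cursor = unsafe { cursor.add(1) };
    }
}

/// Host-side stand-in for `.bss`: fill a buffer with junk, then clear it.
pub fn demo_zero_bss() -> [u64; 4] {
    let mut fake_bss = [0xDEAD_BEEF_u64; 4];
    let range = fake_bss.as_mut_ptr_range();
    unsafe { zero_region(range.start, range.end) };
    fake_bss
}
```

The "exclusive" end pointer is the same convention as a symbol like `__bss_end_exclusive`: it points one past the last word to clear.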

Oh, we just make an assertion that we don't need relocations (the `.got` must be empty).

That's interesting, we use lower addresses for the stack.

The kernel is loaded at `0x80000`, I wonder if that's a design choice.

`#[inline(always)]` - nice, we have that; it's a strong suggestion, I wonder what edge cases are for that.

[found](https://users.rust-lang.org/t/inline-functions-with-enable-feature/29041), it appears that the compiler will yell at you

neat! we can refer to the root of the crate with `crate::something`

macros! of course! we have that.

`lo12` lol? Oh, it's not LOL2, it's the lower 12 bits of a symbol's address in assembly!

Oh!... yes

https://sourceware.org/binutils/docs-2.36/as/AArch64_002dRelocations.html

it is about loading a relative address! the address translation!
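
The split behind `lo12` is just bit masking, so a quick sketch: assuming 4 KiB pages, an address is cut into a page base (what an `adrp`-style instruction produces) and the lower 12 bits (what `:lo12:` adds back). The function name is mine.

```rust
/// Mask selecting the lower 12 bits, i.e. the offset inside a 4 KiB page.
const PAGE_MASK: u64 = 0xFFF;

/// Split an address into (page base, lower 12 bits).
/// Adding both parts together gives the original address again.
pub fn split_page_lo12(addr: u64) -> (u64, u64) {
    (addr & !PAGE_MASK, addr & PAGE_MASK)
}
```

For example, `0x81234` splits into the page base `0x81000` and the low part `0x234`.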

We have 2 instructions: `MRS` (Move to Register from Special Register) and `MSR` (Move to Special Register from Register)

`b.ne label`

`stp`, this took me quite a while.

```arm-asm
// Post-index: store the pair at [x0], then x0 += imm
STP st1, st2, [x0], #imm
// Pre-index: x0 += imm first, then store the pair at [x0] (note the `!`)
STP st1, st2, [x0, #imm]!
```

## 16.11

Yesterday I had a problem with the [second tutorial](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/02_runtime_init): a problem with linker symbols. I am checking if it works in the original project.

And if it's the problem that I would need to use

It does compile on nightly version.

I will also check what happens if I set the [rust-toolchain.toml](https://rust-lang.github.io/rustup/overrides.html) file to the same as in the example.

It is not a problem with the rustc version.

Is it a target problem?

No.

I need to check, somewhere here is a problem:

```sh

rustc --crate-name tad_os --edition=2021 src/main.rs --error-format=json --json=diagnostic-rendered-ansi,artifacts,future-incompat --crate-type bin --emit=dep-info,link -C panic=abort -C embed-bitcode=no -C debuginfo=2 --cfg 'feature="default"' -C metadata=62789bdc7aa160c0 -C extra-filename=-62789bdc7aa160c0 --out-dir /workspaces/tadOS/target/aarch64-unknown-none-softfloat/debug/deps --target aarch64-unknown-none-softfloat -C incremental=/workspaces/tadOS/target/aarch64-unknown-none-softfloat/debug/incremental -L dependency=/workspaces/tadOS/target/aarch64-unknown-none-softfloat/debug/deps -L dependency=/workspaces/tadOS/target/debug/deps --extern aarch64_cpu=/workspaces/tadOS/target/aarch64-unknown-none-softfloat/debug/deps/libaarch64_cpu-c894ffc3ce448945.rlib

```

```makefile
RUSTFLAGS = $(RUSTC_MISC_ARGS) \
    -C link-arg=--library-path=$(LD_SCRIPT_PATH) \
    -C link-arg=--script=$(KERNEL_LINKER_SCRIPT)
```

This is what I believe I'm missing.

Still doesn't work

## 17.11

And it appears that it was what I was missing.

First, setting the feature fixes `BOOT_CORE_ID`

And setting the linker args handles `__bss_end_exclusive` and `__boot_core_stack_end_exclusive`.

I managed to do that, finally I got unstuck, and finished the second tutorial!

The next tutorials I will do differently.

I will analyze and play with the code, and note what needs to be done.

Instead of rewriting someone's code and learning those bits.

##### [Tutorial 03](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/03_hacky_hello_world)

sources to check:

- [x] `src/console.rs` (interface)

- [x] `src/bsp/raspberrypi/console.rs` (implementation)

- [x] `main.rs`

```rust
#[macro_export]
macro_rules! print {
    ($($arg:tt)*) => ($crate::print::_print(format_args!($($arg)*)));
}
```

This is kind of magic. What the heck is going on here?

So, this macro comes from the [std crate](https://doc.rust-lang.org/src/std/macros.rs.html).

Oh, ok. It's just the standard macro syntax https://doc.rust-lang.org/rust-by-example/macros.html

https://doc.rust-lang.org/book/ch19-06-macros.html

We take zero or more token tree (`:tt`) arguments.

and just execute `_print`
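
To play with the pattern on the host, here is a sketch with the same shape: a macro that accepts any token trees, wraps them in `format_args!`, and forwards them to a `_print` function. The macro name `kprint!`, the `CONSOLE` string standing in for the real console, and `demo_print` are all made up for this experiment.

```rust
use std::fmt::{self, Write};
use std::sync::Mutex;

/// Stand-in for the console: on the host we collect output in a string.
static CONSOLE: Mutex<String> = Mutex::new(String::new());

/// The function the macro forwards to; it receives pre-built format arguments.
pub fn _print(args: fmt::Arguments) {
    let mut console = CONSOLE.lock().unwrap();
    console.write_fmt(args).unwrap();
}

/// Hypothetical `kprint!`: takes zero or more token trees and hands them
/// to `format_args!`, exactly like the tutorial's `print!` does.
macro_rules! kprint {
    ($($arg:tt)*) => (_print(format_args!($($arg)*)));
}

/// Print something and return what ended up in the fake console.
pub fn demo_print() -> String {
    kprint!("core {} says {}", 0, "hello");
    CONSOLE.lock().unwrap().clone()
}
```

The macro itself knows nothing about formatting; `format_args!` does the parsing at compile time and `write_fmt` does the output.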

So, it looks that I need to:

- [x] create a module for the console that uses `Write`

- [x] a function that returns the implementation-specific console.

- [x] that implementation-specific console (struct with trait) uses the QEMU address (`0x3F20_1000`) that allows byte-by-byte printing (implement `write_str`)

- [x] a print module that exposes the `print!` and `println!` macros.

- [x] now, main will print, and panic

- [x] the panic handler takes location arguments, and prints the source (using `println`): 'File', 'line', 'column'

- [x] the panic handler also prevents multiple panics, simply by using a static `AtomicBool`: either trap the CPU inside, or allow the CPU to return from the function
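
The last item can be sketched in a few lines. This is my own take on the idea, not the tutorial's code: the first caller flips the flag and is allowed to continue, every later caller sees the flag already set and should trap the CPU instead. The function name is hypothetical.

```rust
use std::sync::atomic::{AtomicBool, Ordering};

/// Returns `true` only for the first caller.
/// `swap` stores `true` and hands back the previous value in one atomic step,
/// so a panic that happens while already panicking gets `false`.
pub fn first_panic(flag: &AtomicBool) -> bool {
    !flag.swap(true, Ordering::Relaxed)
}
```

In a panic handler the flag would be a `static`, and a `false` result would lead straight into an endless wait loop.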

I don't feel that I'm learning anything.

It feels more like copy-pasting the code, with little understanding.

Something is wrong.

I will continue, in the same manner

## 18.11

[today's tutorial](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/04_safe_globals)

I will try to approach it in a different manner.

Understand the problem first, and how rust handles it.

the code is a solution. I will just compare with mine later.

The problem is that Rust doesn't like global mutable variables, because of the borrow-checker principle that either multiple immutable references exist, or only one mutable reference.

In std, there's `Cell<T>` for a single thread and `Mutex<T>` for multiple threads.

There's a concept of **interior mutability**: interior mutability means mutable via a `&T` reference, as opposed to **inherited mutability**, which means mutable only via a `&mut T` reference.
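
A small host-side sketch of both flavours, to make the idea tangible. The type and function names are invented for this note; only `Cell` and `Mutex` come from std.

```rust
use std::cell::Cell;
use std::sync::Mutex;

/// Single-threaded interior mutability: state changes through `&self`.
pub struct HitCounter {
    hits: Cell<u32>,
}

impl HitCounter {
    pub fn new() -> Self {
        Self { hits: Cell::new(0) }
    }

    /// Note the `&self`: no `&mut` is needed to update the counter.
    pub fn record(&self) -> u32 {
        let next = self.hits.get() + 1;
        self.hits.set(next);
        next
    }
}

/// Thread-safe interior mutability: a global that is not `static mut`.
static GLOBAL_HITS: Mutex<u32> = Mutex::new(0);

/// Increment the global counter and return the new value.
pub fn record_global() -> u32 {
    let mut hits = GLOBAL_HITS.lock().unwrap();
    *hits += 1;
    *hits
}
```

Both `record` and `record_global` mutate state while only holding a `&` reference, which is exactly what a global console or driver needs.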

[This article](https://docs.rs/dtolnay/latest/dtolnay/macro._02__reference_types.html) is just awesome! I'm getting deeper! Yay!

Once we get to that deeper level, `&` and `&mut` are no longer immutable and mutable references.

No, instead we use more accurate names that explain the mechanism of references, instead of its effects.

We call `&` a **shared reference**, and `&mut` an **exclusive reference**.

We can't have shared and exclusive references at the same time, because an exclusive reference assumes there are no other references. This includes references on other threads.

"Keep in mind that data behind a shared reference may also be mutable sometimes"

There's a trait `Send` for types that can be transferred across threads.

And `Sync` for types where it's safe to share references between threads.
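A small host-side sketch of both ideas, using only std types. `Counter`, `GLOBAL_HITS` and `bump_global` are made-up names for illustration; the tutorial's own solution for bare metal is different, since `std::sync::Mutex` is not available there.

```rust
use std::cell::Cell;
use std::sync::Mutex;

/// Single-threaded interior mutability: `bump` takes `&self`,
/// yet it changes the state behind the shared reference.
struct Counter {
    hits: Cell<u32>,
}

impl Counter {
    fn bump(&self) -> u32 {
        self.hits.set(self.hits.get() + 1);
        self.hits.get()
    }
}

/// A global that is fine to share across threads: `Mutex<u32>` is `Sync`,
/// so it can live in a plain `static` without `static mut`.
static GLOBAL_HITS: Mutex<u32> = Mutex::new(0);

fn bump_global() -> u32 {
    let mut guard = GLOBAL_HITS.lock().unwrap();
    *guard += 1;
    *guard
}
```

`Cell<u32>` is `Send` but not `Sync`, which is exactly why it can't be used for the global and `Mutex` can.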

Is there a special `?` syntax to remove traits? No, it's something different.

Done, I guess.

I understood the problem, looked at implementations, learned something. But I still transcribed the code.

Yeah, the entry barrier is high. Once I started implementing, it went quite well, but I was still missing the trait part (and the interface part) in my approach.

## 20.11

Oh boy!

It's time for [UART](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/05_drivers_gpio_uart)! The real one!

*doom 2016 soundtrack is playing in background*

Drivers! I see that they use the same idea as in the Linux kernel.

We provide a common interface for drivers methods like `driver_init()`

MMIO - memory mapped I/O (forgot about that)

WTF is PhantomData in rust?

Oh, actually **it was** in Rustonomicon. [Here](https://doc.rust-lang.org/nomicon/phantom-data.html).

And yeah, that was one of the things I hadn't fully figured out.

As I understand it, it's a zero-sized type (yes, typical Rust)

that you explicitly add to make the compiler better understand your intentions.

It is useful, when you work with raw pointers (unsafe Rust). [See an example](https://doc.rust-lang.org/std/marker/struct.PhantomData.html#examples)

So, in MMIO we store address of device. And instead of just doing that, we would like to ensure strong typing in Rust. [This example in docs](https://doc.rust-lang.org/std/marker/struct.PhantomData.html#unused-type-parameters)

Indeed, Rust really requires you to change your way of thinking.

Lolz

We have a `yeet` operator in rust!

https://doc.rust-lang.org/core/ops/struct.Yeet.html

https://lobste.rs/s/3i5r6d/are_we_yeet_yet

![[Pasted image 20231120185459.png]]

I fucking love that idea!

`git yeet, git yoink`

I'm adding that to my git configuration!

Done ([0fc9936](https://github.com/tad1/tadOS/commit/0fc9936b4d4fa7abea9e9595bfb88e2f7792f479)):

![[Pasted image 20231120185955.png]]

Anyway I was looking at https://doc.rust-lang.org/core/ops/ figuring out what that module was doing.

Unsafe rust is very neat in terms of how we apply strong typing.

To summarize:

##### MMIO

Basically, it's an I/O device that has an assigned physical memory address.

In Rust we can create a low-cost (maybe even zero-cost; I don't know) abstraction for any MMIO device that provides strong typing and Rust safety.

Into the wrapper itself we inject zero-sized type information by using `PhantomData`.

We also implement the `Deref` trait (the `*var` operator) for all devices.
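A minimal sketch of such a wrapper. The names `MmioDerefWrapper` and `RegisterBlock` and the two-register layout are assumptions for illustration; real driver code would also use volatile register types (such as the ones from `tock-registers`) rather than plain `u32` fields.

```rust
use std::marker::PhantomData;
use std::ops::Deref;

/// Hypothetical register layout of some device.
#[repr(C)]
struct RegisterBlock {
    data: u32,
    status: u32,
}

struct MmioDerefWrapper<T> {
    start_addr: usize,
    // Zero-sized: only records which register layout lives at `start_addr`.
    phantom: PhantomData<fn() -> T>,
}

impl<T> MmioDerefWrapper<T> {
    /// Safety: `start_addr` must point to a valid, live `T`.
    const unsafe fn new(start_addr: usize) -> Self {
        Self {
            start_addr,
            phantom: PhantomData,
        }
    }
}

impl<T> Deref for MmioDerefWrapper<T> {
    type Target = T;

    fn deref(&self) -> &T {
        // The address is reinterpreted as a reference to the register layout.
        unsafe { &*(self.start_addr as *const T) }
    }
}
```

The wrapper is exactly as big as one `usize`; the type parameter costs nothing at runtime, it only tells the compiler what `*wrapper` is.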

##### Continued

*doom soundtrack intensive*

I got curious, and actually started reading the source before finishing the readme. 'Tis a great experience.

I will do the interface today, and tomorrow the implementation.

## 21.11

Time to finish UART

*doom music continues (in 1993 style)*

Now I first make detailed notes: what the visibility is, what each trait returns, what the important remarks are about; only then do I write code.

There's a crate we are using: https://crates.io/crates/tock-registers

It adds types and memory safety to registers.

GPIO implemented (mapping to UART)

I just copied UART registers definitions.

There's no value in spending time once again rewriting values from docs.

I actually copied all definitions.

It would be just coding.

I actually yoinked all remaining code.

Like why would I write that?

I think I did this because I'm running out of time.

It took me a while to figure out that I need to convert ELF output to image. Ekhem..

It's fucking working!!!!!

Let's fucking go!

This is my setup.

![[IMG_6349.jpg]]

(I did not have any F2F cable)

And this is the beautiful output:

![[Pasted image 20231121192140.png]]

Oh.. actually we can do this better

![[Pasted image 20231121193913.png]]

perfect!

## 26.11

Managed to find some time to continue on this project.

Right now studying and copying the [chainloader tutorial (06)](https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/06_uart_chainloader)

Done, but there's still a problem

## 01.12

The problem is with transmission.

I changed the speed from 921 600 to 115 200.

The baud rate error got smaller, and the Raspberry reads twice as many bytes.

## 02.12

A friend told me that the default baud rate is 9600. I checked it.

And yeah for 48 MHz that's great. 0% Error. Should work

But it's also slow.

On my Raspberry the UART can theoretically be 300 times faster.
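The 0% error at 9600 baud can be checked with a few lines. This sketch assumes a PL011-style divisor (divisor = clock / (16 * baud), with a 6-bit fractional part) and the 48 MHz UART clock mentioned above; the function names are made up.

```rust
/// Integer and fractional divisor for a PL011-style UART, where
/// divisor = clock / (16 * baud) and the fraction has 6 bits (1/64 steps).
fn baud_divisors(uart_clock_hz: u64, baud: u64) -> (u32, u32) {
    // divisor * 64 = clock * 4 / baud; computed as (clock * 8 / baud + 1) / 2
    // to round to the nearest 1/64 step.
    let scaled = (uart_clock_hz * 8 / baud + 1) / 2;
    ((scaled / 64) as u32, (scaled % 64) as u32)
}

/// Baud rate actually produced by a divisor pair.
fn actual_baud(uart_clock_hz: u64, ibrd: u32, fbrd: u32) -> f64 {
    let divisor = ibrd as f64 + fbrd as f64 / 64.0;
    uart_clock_hz as f64 / (16.0 * divisor)
}

/// Relative error in percent between the requested and the produced rate.
fn baud_error_percent(uart_clock_hz: u64, baud: u64) -> f64 {
    let (ibrd, fbrd) = baud_divisors(uart_clock_hz, baud);
    let actual = actual_baud(uart_clock_hz, ibrd, fbrd);
    (actual - baud as f64).abs() / baud as f64 * 100.0
}
```

With a 48 MHz clock the divisor for 9600 is exactly 312.5, which the 6-bit fraction can represent, hence no error. The smallest divisor of 1 gives 48 MHz / 16 = 3 Mbaud, roughly 300 times 9600.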

It works slow as fuck.. but it works

![[Pasted image 20231202132843.png]]

The problem is the baud rates that we can produce on the PC.

It appears that libraries use a table of standard rates:

https://github.com/hparra/ruby-serialport/blob/53e396d0f8894a77260e9c76c3a71f8cc2bbacdf/ext/native/posix_serialport_impl.c#L267

https://github.com/raspberrypi/linux/issues/4453

but for now, this rate is good enough.

Ok, managed to finish that,

it turned out that I had a typo in boot.s, fixed in [91d6065](https://github.com/tad1/tadOS/commit/91d606518046d1a993a9b25913d4cf43dd37ed39)

It's working now

![[Pasted image 20231202173709.png]]

## 03.12

Time for a JTAG!

...

oh my! Why does this thing cost so much?

https://www.olimex.com/Products/ARM/JTAG/ARM-USB-TINY-H/

Do I need a special external debugger for that?

Can I make it myself? Would that cost less?

I could buy that, and get much more into the embedded programming.

But that would mean the Rust-Roadmap would require costly hardware.

(welp, I actually have the Raspberry Pi)

but I have a specific idea in mind.

Can this be done by software?

With a bare minimum of hardware? Yet a big overhead by making everything in software.

reading:

https://www.xjtag.com/about-jtag/jtag-a-technical-overview/

Guess what!

I ordered that fucking JTAG interface!

Why? Because there will be more to explore on embedded rust.

And that will be a glorious tool to have.

Getting on another level of understanding every bit in CPU.

Acquiring the hardware, and the ability to test things out on the fly on real hardware.

## 07.12

I got a delivery!

![[6E1F53ED-16CD-40CF-90E9-D2EA1EC9A147.jpeg|600]]

There it is! A JTAG!

![[6C78B9DA-FA72-413A-8B3B-C53BC677B8AD.jpeg]]

There is a funny paper thing instead of bubble wrap.

## 11.12

I'm back in the project.

From [BCM2837-ARM-Peripherals](https://cs140e.sergio.bz/docs/BCM2837-ARM-Peripherals.pdf):

There's something called External Mass Media Controller (EMMC)

I read the documentation, and I must say: it's decent.

All the needed information is there.

I take it as a challenge to finally get my hands dirty implementing the module on my own.

To keep it short, all notes about SD Card Interface are [[SD Card Interace|here]].

I found source code that already does it. I will use that.

But I do need to rewrite that, instead of copy-paste.

## 13.12

Time to use that driver, now the abstraction should not depend on concrete instances.

That being said, the interface should not depend on the driver; rather, the driver should depend on the interface.

## 14.12

It doesn't work.

Welp, It appears that it's failing on card initialization.

Ok, it appears that the code I'm using somehow changes the EMMC driver speed, and everything breaks.

Having a stable 40KHz makes everything work correctly.

However, there's still a problem.

The crate doesn't work!

Like, it halts somewhere.

I still have no idea how to set up GDB to work with Rust and JTAG.

I'm using a stripped ELF for kernel images, so I suspect that there are no debug symbols in it.

I also don't know how to emulate an SD card in QEMU, so only one thing is left: `printf` debugging.

Ok, I yoinked even more code from [this repo](https://github.com/nihalpasham/rustBoot/) (the entire file system)

and found, that there's still the same problem.

It gets into an infinite loop somewhere.

But this time, I'm able to locate and repair it.

Ok, this fails:

```rust

let lba_start = read_u32(&partition[LBA_START_INDEX..(LBA_START_INDEX + 4)]);

```

Also, the bad news for me: the cargo crates metadata just broke.

Everything is red now.

Ok, `cargo clean` is super-effective for that.

## 15.12

I'd like to enable the SD card on the host (in QEMU).

To do that, I'd need to pass `-device sd-card,drive=mydrive -drive id=mydrive,if=none,format=raw,file=image.bin`

where I have to create the image with `dd`

`> dd if=/dev/mmcblk1 of=<pathToStore>` (`lsblk` to find it)

And it might work then.

But having GDB on the hardware would be more useful.

Yeah, let's just make it working correctly on hardware with all needed symbols.

https://metebalci.com/blog/bare-metal-raspberry-pi-3b-jtag

https://www.suse.com/c/debugging-raspberry-pi-3-with-jtag/

A thing that I may try first: remove all code optimization.

...

**oh, fuck me!**

The library I wanted to use tried to use the FPU!

I don't have the FPU enabled!

Changing target from `aarch64-unknown-none` to `aarch64-unknown-none-softfloat` solved my problems.

Now, will `embedded-sdmmc` work on this target platform?

Yes! It does!

Nice, no problem with printing the file!

![[Pasted image 20231215181059.png]]

Now the fun begins.

Suppose we use printf: how does the call actually reach a system function?

I wrote a simple C program:

```c

#include <stdio.h>

int main() {
    printf("Hello there!");
    return 0;
}

```

Found interesting tool: https://github.com/ruslashev/elfcat

It generates an HTML file like this:

![[Pasted image 20231215184328.png]]

Also a very good ELF reference: https://man.archlinux.org/man/elf.5.en

As always, Arch has the best wiki.

I `ctrl-f`'d that and found that printf is imported from the glibc shared library (`libc.so`).

So, it would mean that we need a loader for ELF binaries.

In Linux we have `ld-linux.so`; it's loaded by the OS (I guess)

and it's depended on all linux platforms.

So, there's a `-static` flag in `ld` and `gcc`; what it does is embed the library into a single ELF (one that doesn't require dynamic loading).

So, it's simple as copy-paste.

But what does printf actually look like?

Oh, yeah they just use streams, how convenient.

We can create a library, with addresses to functions, but It's fucking unreasonable to do such a thing...

Also, we can define kernel interrupts.

Or create a statically loaded library with all functions.

Fuck this!

Let's create a Big Fucking Table (BFT)

The first thing I'd like to make is a shell

That can run binaries, from `/bin` directory (from SD card)

and display beautiful tadOS logo in center of screen

I tested that already, we can send beeps, with UART, because it's console on PC that's interpreting data.

Ok, I decided to do it in the easiest and most easily extensible manner.

Implementing the `syscall`.

Either by setting vector table, or static memory address.

But first I need to define API.

This is (I think) a good approach:

https://github.com/elichai/syscalls-rs/blob/master/src/arch/i686.rs

Actually fuck this!

I have an idea, just let's make the common segment for all binaries.

It will be the easiest for later.

Like, since we deal with a linear address (no virtual), I can just embed into linker a section with a certain code.

The Kernel. And I shall strip that... I guess..

but then I have to rebuild everything when moving functions.

https://developer.arm.com/documentation/den0024/a/AArch64-Exception-Handling/Synchronous-and-asynchronous-exceptions/System-calls

`SVC`

So I need to populate the vector table located at `VBAR_ELn` + 0_offset

And I have 128 bytes per entry.
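For orientation, the AArch64 vector table has 16 entries of 0x80 (128) bytes each: four exception kinds for each of four sources. A small sketch of the offset calculation, with made-up enum and function names:

```rust
/// Where the exception was taken from.
#[derive(Clone, Copy)]
enum Source {
    CurrentElSp0 = 0,
    CurrentElSpx = 1,
    LowerElAarch64 = 2,
    LowerElAarch32 = 3,
}

/// What kind of exception it is.
#[derive(Clone, Copy)]
enum Kind {
    Synchronous = 0,
    Irq = 1,
    Fiq = 2,
    SError = 3,
}

/// Each vector entry is 0x80 bytes, i.e. room for 32 instructions.
const ENTRY_SIZE: u64 = 0x80;

/// Offset of the handler from the table base held in `VBAR_ELn`.
fn vector_offset(source: Source, kind: Kind) -> u64 {
    (source as u64) * 4 * ENTRY_SIZE + (kind as u64) * ENTRY_SIZE
}
```

A synchronous exception such as `SVC` from a lower exception level running AArch64 therefore lands at offset `0x400`, while the same exception taken from the current level with `SP_ELx` lands at `0x200`.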

SVC vs HVC vs SMC

the difference is the Exception level:

SVC - Supervisor (EL1 - Kernel)

HVC - Hypervisor (EL2)

SMC - Monitor (EL3)

ERET - return from exception

PSTATE - restore state?

this is interesting:

![[Pasted image 20231216004230.png]]

and considering that:

https://stackoverflow.com/questions/45107469/change-arm-mode-to-system-mode-cpsr-on-armv8-a

I'm assuming that I start in a hypervisor mode.

This resource I found earlier is helpful:

https://cs140e.sergio.bz/docs/ARMv8-A-Programmer-Guide.pdf#G13.1218865

HVC

I need to: initialize VBAR_EL2, ELR_EL2

get address of Rust function to handle command

populate [VBAR_EL1]+0x0 with code that

save address of rust function (either write to address, or return in register)

return back

Had to replace the `MCR` instructions with `msr`

https://stackoverflow.com/questions/32374599/mcr-and-mrc-does-not-exist-on-aarch64

So, once again I'm using this great repo:

https://github.com/nihalpasham/rustBoot/blob/main/boards/hal/src/rpi/rpi4/exception/exception.s

## 16.12

Realized that ... has tutorials about exceptions and privilege levels.

Ok, found it! In QEMU EL3 is forced by this line.

![[Pasted image 20231216155851.png]]

It took me like 6 hours to make this.

Every fucking version of QEMU had a problem.

Either my GCC compiler got smarter and said: "no, no no!" (had to add `--disable-werror` to QEMU's `./configure`) or I got a too-new libbpf which did not have a function that was required (unsolved to this day).

I came upon countless pieces of code that do the same thing: in case of the EL3 exception level, set it back to EL2.

Ultimately I got it working by joining this snippet from [here](https://github.com/bztsrc/raspi3-tutorial/blob/master/0F_executionlevel/start.S) (the original raspi3 OS tutorial):

```arm-asm

    // Detecting if we are on EL3
    mrs x0, CurrentEL
    and x0, x0, #12 // clear reserved bits
    // running at EL3?
    cmp x0, #12
    b.eq .L_EL3_to_EL2

```
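The same check, written out in Rust to make the bit layout explicit. `CurrentEL` keeps the exception level in bits [3:2]; the function names are made up, and the raw register value is passed in as a plain integer so this runs on a host.

```rust
/// Extracts the exception level (0..=3) from a raw `CurrentEL` value.
fn exception_level(current_el: u64) -> u8 {
    ((current_el >> 2) & 0b11) as u8
}

/// Same test as `and x0, x0, #12` followed by `cmp x0, #12`.
fn is_el3(current_el: u64) -> bool {
    (current_el & 0b1100) == 0b1100
}
```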

And this for handling the actual transition from EL3 to EL2 ([from docs](https://developer.arm.com/documentation/dai0527/a/)):

```arm-asm

.L_EL3_to_EL2:
    // Initialize SCTLR_EL2 and HCR_EL2 to save values before entering EL2.
    MSR SCTLR_EL2, XZR
    MSR HCR_EL2, XZR
    // Determine the EL2 Execution state.
    MRS X0, SCR_EL3
    ORR X0, X0, #(1<<10) // RW EL2 Execution state is AArch64.
    ORR X0, X0, #(1<<0) // NS EL1 is Non-secure world.
    MSR SCR_EL3, x0
    MOV X0, #0b01001 // DAIF=0000
    MSR SPSR_EL3, X0 // M[4:0]=01001 EL2h must match SCR_EL3.RW
    // Determine EL2 entry.
    ADR X0, .el2_entry // el2_entry points to the first instruction of
    MSR ELR_EL3, X0 // EL2 code.
    ERET

```

It fucking works!

The emulation!

Finally I have a debugger!

This is comprehensive ([source](https://developer.arm.com/documentation/ddi0487/ja/) - page 5358):

![[Pasted image 20231217013523.png]]

Actually, you can also read this: https://github.com/rust-embedded/rust-raspberrypi-OS-tutorials/tree/master/11_exceptions_part1_groundwork

PEW! I managed to create a `kernel_gate`.

How does it work? Basically, I handle the exception if its syndrome is set to `0x666`; if so, I write the address of a Rust function into the saved `x0` register.

This function is the `kernel_call` function.

Basically, I exposed some of the kernel's functionality via this function.

Like `WriteChar`, `Spin`, `ReadBlock`

To make it easy to manage, the function receives 2 parameters:

`arg` and `resp`

Where depending on request `arg` is a value or pointer to struct.

For `Spin`, arg is the time in milliseconds; for `WriteChar`, arg is a `u8` as `u64`.

For `ReadChar`, arg is the address of a `u8`; and the arg of `ReadBlock` is a structure holding the blocks' address (`&mut [Block]`), the first block index, and the reason.

`resp` is reserved for now, mainly to split arguments from the return value, but it does require additional jumps (I might reconsider that).

Yet it's still just a temporary solution, just to complete this part of the College Project.
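A rough host-side sketch of what such a dispatcher could look like. Everything here is hypothetical: the request numbers, the `Kernel` struct and the error type are invented for illustration, and `ReadBlock` is left out; only the `arg`/`resp` shape follows the description above.

```rust
/// Hypothetical request numbers; the real values are not given in this log.
#[derive(Debug, Clone, Copy, PartialEq)]
#[repr(u64)]
enum Request {
    WriteChar = 0,
    Spin = 1,
    ReadChar = 2,
}

/// Stand-in for kernel state so the sketch can run on a host.
struct Kernel {
    console_out: Vec<u8>,
    console_in: Vec<u8>,
    slept_ms: u64,
}

impl Kernel {
    /// Safety: for `ReadChar`, `arg` must be the address of a writable `u8`.
    unsafe fn kernel_call(
        &mut self,
        request: Request,
        arg: u64,
        _resp: u64, // reserved, unused for now
    ) -> Result<(), &'static str> {
        match request {
            // `arg` is a `u8` widened to `u64`.
            Request::WriteChar => {
                self.console_out.push(arg as u8);
                Ok(())
            }
            // `arg` is a duration in milliseconds (only recorded here).
            Request::Spin => {
                self.slept_ms += arg;
                Ok(())
            }
            // `arg` is the address of the caller's `u8`.
            Request::ReadChar => {
                let byte = self.console_in.pop().ok_or("no input available")?;
                unsafe { *(arg as *mut u8) = byte };
                Ok(())
            }
        }
    }
}
```

Passing either a value or an address through the same `u64` keeps the gate itself trivial, at the price of the callee having to trust the caller, which is why the function is marked `unsafe` in this sketch.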

`kernel_call` is finished and tested.

Now it's time to finish the main goal for this College Project, which is stated as:

"Make a Kernel (microOS) in Rust that can load ELF binary"

I found good references here:

https://refspecs.linuxfoundation.org/elf/gabi4+/ch4.eheader.html

https://refspecs.linuxbase.org/elf/gabi4+/ch5.pheader.html

https://sites.uclouvain.be/SystInfo/usr/include/elf.h.html
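To make the references concrete, here is a minimal sketch of reading the fields a loader needs from an ELF64 header. The offsets follow the ELF64 layout from the references above; the struct and function names are made up, only little-endian files are handled, and this is not the tadOS loader.

```rust
#[derive(Debug, PartialEq)]
struct ElfHeader {
    machine: u16,   // e_machine
    entry: u64,     // e_entry: address to jump to
    phoff: u64,     // e_phoff: file offset of the program header table
    phentsize: u16, // e_phentsize: size of one program header
    phnum: u16,     // e_phnum: number of program headers
}

const EM_AARCH64: u16 = 183;

fn read_u16(bytes: &[u8], at: usize) -> u16 {
    u16::from_le_bytes([bytes[at], bytes[at + 1]])
}

fn read_u64(bytes: &[u8], at: usize) -> u64 {
    let mut raw = [0u8; 8];
    raw.copy_from_slice(&bytes[at..at + 8]);
    u64::from_le_bytes(raw)
}

fn parse_elf64_header(bytes: &[u8]) -> Result<ElfHeader, &'static str> {
    if bytes.len() < 64 {
        return Err("too short for an ELF64 header");
    }
    if !bytes.starts_with(b"\x7fELF") {
        return Err("bad magic");
    }
    if bytes[4] != 2 {
        return Err("not ELFCLASS64");
    }
    if bytes[5] != 1 {
        return Err("not little-endian");
    }
    Ok(ElfHeader {
        machine: read_u16(bytes, 18),
        entry: read_u64(bytes, 24),
        phoff: read_u64(bytes, 32),
        phentsize: read_u16(bytes, 54),
        phnum: read_u16(bytes, 56),
    })
}
```

From here a loader would walk `phnum` program headers starting at `phoff` and copy each loadable segment to its address before jumping to `entry`.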

- [ ] TODO: insert link to 101 kernel image

Done, but it needs testing.

But to test that, I need to get a file from the SD card...

So, I need a console, a shell that will load an executable based on input.

But here's a problem: to test if that executable will work, I need to load the shell first.

Another problem is with running 2 programs: in the current state the programmer needs to explicitly set the program start address to avoid conflicts.

So I decided to integrate the shell with the kernel main program.

A kernel-level shell. Like, what OS has such a thing?

So, it's a bit late now, and I'm messing around with escape codes.

The nice thing is that all modern consoles have a full support of ANSI escape codes (speaking about Unix of course).

I added backspace (it's just a `\b`, `' '`, `\b` sequence) and `ctrl+backspace`.

And tab for hinting (autocompletion not yet implemented);

for that I'd like to add `zsh`-like autocompletion.

Ok, got it; now `embedded_sdmmc` needs a string to open a file (max 11 characters, the FAT short name).

I store the currently typed command in a `[u8;11]` buffer.

Now I'm wondering how to convert a slice of u8 to a string...
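A small sketch of such a command buffer with backspace handling. The names are made up, the echo goes into a `Vec` instead of the UART, and treating both `0x08` and `0x7f` as backspace is an assumption about what the terminal sends.

```rust
const MAX_NAME: usize = 11; // FAT short name limit mentioned above

struct LineBuffer {
    bytes: [u8; MAX_NAME],
    len: usize,
}

impl LineBuffer {
    fn new() -> Self {
        Self { bytes: [0; MAX_NAME], len: 0 }
    }

    /// Feeds one input byte and appends whatever should be echoed to `echo`.
    fn input(&mut self, byte: u8, echo: &mut Vec<u8>) {
        match byte {
            // Backspace: step back, overwrite with a space, step back again.
            0x08 | 0x7f => {
                if self.len > 0 {
                    self.len -= 1;
                    echo.extend_from_slice(b"\x08 \x08");
                }
            }
            // Printable ASCII: store and echo, unless the buffer is full.
            0x20..=0x7e => {
                if self.len < MAX_NAME {
                    self.bytes[self.len] = byte;
                    self.len += 1;
                    echo.push(byte);
                }
            }
            // Everything else is ignored in this sketch.
            _ => {}
        }
    }

    fn as_bytes(&self) -> &[u8] {
        &self.bytes[..self.len]
    }
}
```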

OH, the rust source code came to help!

![[Pasted image 20231218002631.png]]

It's just a `transmute`.

And no need to null terminate string. Neat
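For comparison, a checked variant: `from_utf8` validates the bytes and returns a `Result`, while the unchecked conversion is the plain reinterpretation. The helper name and its signature are made up for this sketch.

```rust
/// Returns the first `len` bytes of the command buffer as a `&str`,
/// or `None` if `len` is out of range or the bytes are not valid UTF-8.
fn command_as_str(buffer: &[u8; 11], len: usize) -> Option<&str> {
    std::str::from_utf8(buffer.get(..len)?).ok()
}
```

Since a `&str` carries its length, nothing needs to be null-terminated; the slice just has to stop at the number of bytes actually typed.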

Oh boy! It's freaking working!

- [ ] insert image

# v1.1

## 29.02

I decided to go back to this project.

with a main goal to [[Rust|learn Rust]] better.

The final goal of tadOSv1 is to make it run DOOM, via HDMI, Keyboard and with music on micro JACK.

Currently the kernel itself is capable of running DOOM.

However, the problem is with debugging programs for the kernel.

And not only that, but also sending programs via UART/USB/Ethernet (the remote execution) would speed up the development process.

To sum up, current goals:

- Approximate what would be a good medium for sending programs (target goal 10MB/s)

- If needed, buy converter

- If needed, implement driver for new medium

- Allow sending commands via UART from VS Code scripts

- Design remote execution in kernel

- Design debugging in kernel

- Rewrite DOOM in no_std Rust (as of writing, there's no such project on GitHub)

## 01.03

[CP2102](https://files.waveshare.com/upload/8/81/CP2102.pdf) has max speed of 12Mbps

[FT232](https://www.waveshare.com/wiki/FT232_USB_UART_Board_(type_A)) allows for max 3Mbps

Ethernet requires implementing the network stack.

I guess that it leaves me with USB.

https://www.eevblog.com/forum/microcontrollers/complexity-of-usb-stack-vs-tcpip-stack/

There's a crate for that..

https://github.com/rust-embedded-community/usb-device

I might want to read more about rust, after all I still don't comprehend trait system in depth.

https://doc.rust-lang.org/book/ch17-02-trait-objects.html

https://doc.rust-lang.org/book/ch19-03-advanced-traits.html

I can also use WiFi for transmission

## 22.03

I found this:

https://www.khronos.org/api/opensles

https://www.openal.org/

## 14.09

I'm back.

I watched *Bocchi the Rock!*, <small>(I recommend it to all fellow musicians)</small>. I have a damn urge to sink into small world where I can tinker and improve my craft for 3 years. And become good at it.

This project is exactly such an environment.

My first impression after getting back to the code base?

- **Why the fuck does the devcontainer crash?**

updated dockerfile, to handle pacman keyring problems

- **Why the fuck can't I `git log`/`git branch`?**

added `less` package

- **Why the fuck do I get errors?? It worked previously!**

tried to `cargo build` from command line, instead of `F5`. Forgot to add `--features bsp_rpi3` in CLI.

- **Why the fuck do I get a wall of warnings and even more errors?**

breaking changes in Rust (I use nightly); a lot of experimental stuff made it into the stable release since last time.

([6b5aed8](https://github.com/tad1/tadOS/commit/6b5aed87068f6e2a9cde84104cc744c465cb9015), [06cfc34](https://github.com/tad1/tadOS/commit/06cfc34c87ca65ef302f6d529352c79ae699c188)) Now it compiles and works.

Programs can take more than a minute to load. I added perf measurements to ELF loading.

![[Pasted image 20240914225721.png]]

And the code is fucking ridiculous. In a loop we copy the next 32 bytes and write them byte by byte into the selected address. Increased the reading buffer to 512 bytes (block size).

We got 10x boost. Quick win.
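The idea of the fix, sketched on a host: move data one 512-byte block at a time with a bulk copy instead of byte by byte. In this sketch `source.chunks(...)` stands in for reading a block from the SD card, and the function name is made up.

```rust
const BLOCK_SIZE: usize = 512;

/// Copies `source` into `dest` one block at a time and
/// returns the number of blocks that were copied.
fn load_segment(source: &[u8], dest: &mut [u8]) -> usize {
    assert!(dest.len() >= source.len(), "destination too small");
    let mut blocks = 0;
    for (src, dst) in source.chunks(BLOCK_SIZE).zip(dest.chunks_mut(BLOCK_SIZE)) {
        // One bulk copy per block; the last block may be shorter.
        dst[..src.len()].copy_from_slice(src);
        blocks += 1;
    }
    blocks
}
```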

![[Pasted image 20240914232734.png]]

Raspberry Pi 3B uses HDMI 1.3a. This is the only *intended* integrated video output. [I already implemented a VGA display](https://github.com/tad1/pg_sem6_isp/blob/master/lab8a/lab8a.srcs/sources_1/imports/sources_1/new/transmitter.vhd) in VHDL, and I could redo that via GPIO pins. But I'll go straight into learning HDMI.

HDMI is proprietary. And the specs are behind membership and verification... **except for HDMI 1.3a**. I got the specs from https://www.hdmi.org/spec/index (only 270 pages). I'll be reading them for the next few days.

Ok, I found only chapter 3 and the image at 5.1.1 interesting. The specs are for people who create HDMI interfaces/cables.

I was searching the [BCM2837 ARM Peripherals spec](https://cs140e.sergio.bz/docs/BCM2837-ARM-Peripherals.pdf) with the `video`, `gpu`, `HDMI` keywords. I found that they have a custom integrated GPU: VideoCore IV.

Starting physical address: `0xC0000000`

peripherals physical address: `0x3F000000` - `0x3FFFFFFF`

physical address `0x3Fnnnnnn` -> bus address `0x7Ennnnnn`

"Software accessing peripherals using the DMA engines must use bus addresses."
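The mapping for the peripheral window is just a change of base. A minimal sketch, with made-up constant and function names, covering only the `0x3F000000` - `0x3FFFFFFF` peripheral range listed above:

```rust
const PERIPHERAL_PHYS_BASE: u32 = 0x3F00_0000;
const PERIPHERAL_BUS_BASE: u32 = 0x7E00_0000;
const PERIPHERAL_SIZE: u32 = 0x0100_0000;

/// ARM physical address of a peripheral -> bus address (what the DMA engines need).
fn phys_to_bus(phys: u32) -> Option<u32> {
    let offset = phys.checked_sub(PERIPHERAL_PHYS_BASE)?;
    (offset < PERIPHERAL_SIZE).then(|| PERIPHERAL_BUS_BASE + offset)
}

/// Bus address of a peripheral -> ARM physical address (what the CPU writes to).
fn bus_to_phys(bus: u32) -> Option<u32> {
    let offset = bus.checked_sub(PERIPHERAL_BUS_BASE)?;
    (offset < PERIPHERAL_SIZE).then(|| PERIPHERAL_PHYS_BASE + offset)
}
```

The datasheet lists register addresses as bus addresses, so `bus_to_phys` is the direction needed when poking registers from the CPU.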

There are 2 access modes: *direct* and *using DMA*

I have no fucking idea what a bus address is.

Ok, this image is good. So we have 2 MMUs: the first is in the CPU (virtual to physical memory), and the second one (bus to physical) is a bridge between VideoCore and ARM.

![[Pasted image 20240915030326.png]]

<center>(page 5 - <a href="https://cs140e.sergio.bz/docs/BCM2837-ARM-Peripherals.pdf">BCM2837 ARM Peripherals</a>)</center>

They fucking use prefixes in address for caching modes!

I like it!

I found a nice reference (it's in Polish, but it's the most detailed info I could find): https://sklep.avt.pl/data/include/cms/mvs/attachments/rasp0008.pdf

(I might translate, or create a new one)

There's a project on reverse-engineering the VideoCore and creating documentation: https://github.com/hermanhermitage/videocoreiv/wiki/VideoCore-IV---BCM2835-Overview

## 15.09

How do we draw on HDMI screen?

BSC/SPI slave base address: `0x7E21_4000`

At `0x30` offset, there's `GPUSTAT` register.

I guess I'd need to look into the DMA (Direct Memory Access) part. The concept is that the CPU and a peripheral communicate via shared memory.

We have 16 DMA channels. For each, a single operation is described by a *Control Block*, and these form a linked list, so we can specify a sequence of operations up front. It supports AXI read bursts (basically a "give me the next 52 blocks sequentially" type of request).

DMA peripherals generate a *Data Request* (DREQ) signal. *DREQ controls the DMA by gating its AXI bus requests*. Ok, that explains the existence of AXI a bit. A peripheral can also generate a *panic signal*. "**The allocation of peripherals to DMA channels is programmable**". We don't need to worry much about data alignment. "**Each DMA channel can be fully disabled via a top level power register to save power.**"

DMA Channel 0 address: `0x7E007000`

DMA Channel 1 address: `0x7E007100`

DMA Channel offset: `0x100`

DMA Channel 15 address (this one have different base): `0x7EE05000`
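The channel addresses above fit in one small helper. The constant and function names are made up; the values are the bus addresses listed above.

```rust
const DMA_BASE_BUS: u32 = 0x7E00_7000;
const DMA_CHANNEL_STRIDE: u32 = 0x100;
const DMA15_BASE_BUS: u32 = 0x7EE0_5000;

/// Bus address of the register block of a DMA channel (0..=15).
fn dma_channel_base(channel: u8) -> Option<u32> {
    match channel {
        // Channels 0-14 sit next to each other, 0x100 apart.
        0..=14 => Some(DMA_BASE_BUS + u32::from(channel) * DMA_CHANNEL_STRIDE),
        // Channel 15 lives at a separate base.
        15 => Some(DMA15_BASE_BUS),
        _ => None,
    }
}
```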

`CS`, `CONBLK_AD`, `DEBUG` registers have write access.

The others are automatically loaded from the *Control Block*.

Control Block structure:

| Word Offset | Description                | r-o register |
| ----------- | -------------------------- | ------------ |
| 0           | Transfer Information       | TI           |
| 1           | Source Address             | SOURCE_AD    |
| 2           | Destination Address        | DEST_AD      |
| 3           | Transfer Length            | TXFR_LEN     |
| 4           | 2D Mode Stride             | STRIDE       |
| 5           | Next Control Block Address | NEXTCONBK    |
| 6           | Reserved                   | N/A          |
| 7           | Reserved                   | N/A          |

The CB must be 256-bit aligned. We terminate the list with a `0x0000_0000` null value. Once the DMA transfer has finished, the `ACTIVE` bit is cleared. Tips and tricks: we can read `TXFR_LEN` to get info about current progress; we can overwrite `NEXTCONBK`, but that's safe only when the DMA is paused.
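The table maps directly onto a Rust struct: eight 32-bit words, aligned to 256 bits (32 bytes). A sketch with made-up names; `link_chain` and the bus address passed to it are purely illustrative.

```rust
const CONTROL_BLOCK_SIZE: u32 = 32;

/// One DMA control block: 8 words, 256-bit (32-byte) aligned.
#[repr(C, align(32))]
#[derive(Debug, Default, Clone, Copy)]
struct DmaControlBlock {
    transfer_info: u32,      // TI
    source_addr: u32,        // SOURCE_AD
    dest_addr: u32,          // DEST_AD
    transfer_len: u32,       // TXFR_LEN
    stride: u32,             // STRIDE (2D mode)
    next_control_block: u32, // NEXTCONBK, 0 terminates the list
    _reserved: [u32; 2],
}

/// Links consecutive blocks into a chain, assuming the slice will be
/// visible to the DMA engine at bus address `first_bus_addr`.
fn link_chain(blocks: &mut [DmaControlBlock], first_bus_addr: u32) {
    let count = blocks.len();
    for (index, block) in blocks.iter_mut().enumerate() {
        block.next_control_block = if index + 1 < count {
            first_bus_addr + (index as u32 + 1) * CONTROL_BLOCK_SIZE
        } else {
            0 // null value: end of the list
        };
    }
}
```

`#[repr(C, align(32))]` gives both the field order from the table and the alignment requirement, so an array of these blocks is laid out the way the engine expects.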

Finished page 61.

## 17.09

We have 2 types of peripherals here: AXI and APB.

"**What the heck is AXI, and APB?**"

found this: http://verificationexcellence.in/amba-bus-architecture/

Understood like 30% of that, but I get the idea. This stuff is used when you design a chip. AMBA is an umbrella, a set of specifications (AHB, ASB, APB, etc.). What they share are the [4 rules/promises/design principles of AMBA](https://en.wikipedia.org/wiki/Advanced_Microcontroller_Bus_Architecture#Design_principles). They designed buses/interfaces with specific traits in mind:

- APB is a very simple design that's great for low bandwidth

- AXI provides high throughput with small latency

- and more

Over time we got more issues/generations (AMBA 2, AMBA 3,... AMBA 5), where each issue adds new designs or updates previous ones.

Oh, and that stuff is an open specification maintained by ARM. Wikipedia says: because it was free and damn well documented, everyone started using it; it became an industry standard.

## 18.09

**How do we send bytes to HDMI?**

I freaking love that I can write programs for the OS. I've written the [memdisp](https://github.com/tad1/tadOSv0.1-Utils/tree/memdisp) program. So now I can lurk around without JTAG. *As I'm writing this, I'll probably write a full-suite memory view program*. For QoL I'd write an integration between tadOS and Visual Studio, so I can transfer programs. Or even run them with example input.

currently all DMA registers are 0s (and yes, I checked it, `memdisp` does read bytes correctly)

![[Pasted image 20240918022129.png]]

<center>(all 14 DMA registers)</center>

global enable register offset:`0xff0`

bus address: `0x7E007FF0` (ARM physical: `0x3F007FF0`)

all zeros, all DMA engines are disabled

![[Pasted image 20240918022725.png]]

There's [embedded_dma](https://docs.rs/embedded-dma/latest/embedded_dma/) from the embedded Rust team. It's a collection of traits. There are more like it, such as `embedded_io`. I will build the Raspberry Pi DMA interface on them. tadOS needs to handle the DMA engines.

## 24.09

I need an HDMI display to test stuff. And actually a display that I'll use later. Something cheap, and small/medium sized. The best kind would be a monitor that's a pain to keep in storage for years, that no one buys, and that dealers in most cases hot-potato.

I spent like 2 hours looking for a monitor. I found and bought online a used Lenovo D22-20 for a little more than $10.

## 28.09

It arrived!

![[IMG_6592.jpg|500]]

I'll check if it even works.

Oh no!

![[IMG_6594.jpg|400]]

It speaks German!

Anyway, HDMI does work.

![[image.jpg|800]]

I won't cry if I break it. It doesn't have a stand, I guess I will make it a low standing monitor.

Oh boy! Something unexpected happened. The HDMI works; it displays a broken RGB triangle (it's a square)

(insert rgb image here)

https://raspberrypi.stackexchange.com/questions/19354/raspberry-pi-with-boots-up-with-rainbow-screen

Ok, it's a part of firmware boot process. I guess I could poke into their code, so I'll know how to display stuff.

https://github.com/raspberrypi/firmware

There are overlays, but those tell what hardware we want to turn on, off during the boot. I checked the [config.txt reference](https://gist.github.com/GrayJack/2b27cdaf9a6432da7c5d8017a1b99030) there's a keyword framebuffer used.

I found a course on OSDev on Raspberry Pi:

https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/

Mailboxes?

Oh, it's an ARM thing: https://developer.arm.com/documentation/ddi0306/b/CHDGHAIG

It's an interrupt.

There's a note on DMA: use a memory barrier before the first write, and a memory barrier after the last read. The data may arrive in the wrong order when switching peripherals.

Fuck, I have no idea what to do now. I can grasp only shallow information on peripherals. Like, it's too fucked up for me. How am I supposed to figure out what to write to the black box on my left to display stuff on the second monitor? I need knowledge, decent knowledge.

## 02.10

How does one learn, how a complex system works? Assume we don't have any additional information, like documentation.

In my case I have only one copy of raspberry pi; and I don't want to break it. One way would be to monitor existing software.

However, learning a system from a black-box perspective has limitations; you do not truly understand the system, hence you would use only a fraction of its power (unless the testing has large coverage)

Hardware reverse engineering!

I might take time and deep dive into this field. It might seem like overkill for merely handling an HDMI display, yet I have a feeling that it will come in handy later in this project. Assuming one has expert knowledge in electronics and reverse engineering system analysis, one could learn much beyond what's in the documentation.

After all, the docs explain more of the higher-level stuff, the ABI/API, but that's not enough for the scope of this project.

random links I got to quickly read on hardware RE.

https://emsxchange.com/hardware-reverse-engineering/

https://satharus.me/tech/2023/11/30/hardware_reverse_engineering.html

https://www.youtube.com/watch?v=VdgA6JQetD8

https://www.eff.org/issues/coders/reverse-engineering-faq

So, reverse engineering SoC is fucked up. You need a microscope, and some good acids. The component-path analysis is more appealing for me; yet you'd need to learn about each component.

I guess that's the plan: to create a schematic of how HDMI connects to CPU and GPU.

## 03.10

I forgot that I bought that cheap monitor, so I'll not cry if I break it.

Let's do something actually fun!

Let's fucking send random bytes to HDMI!

First thing, I need to enable DMA engine.

`0x7E007FF0` bit 0 = 1, rest 0. (32 bits) -> `0x1`

Cool, I'll create a map of DMA registers (so I won't have to manually type in byte values and addresses). And because I'm lazy I will regex the register definitions from the docs into the code.

Also, there's a weird access type: `W1C`; it appears to mean "write 1 to clear". And another thing, we have mixed `RO`, `RW`, and `W1C` accesses for bits. tock_registers appears not to support such granularity. But I can use the [`Aliased`](https://docs.tockos.org/tock_registers/registers/struct.aliased) register type.

## 05.10

I am lazy; thinking about copying the data of more registers from the docs into Rust... it's too much time.

I think it's a good opportunity to create a web extension I've had in mind for a while. [[HTML Extractor]]

# v2.0

tadOSv2 is an operating system focused on audio

## 22.05

Before starting, I want to learn how PipeWire, PulseAudio, and JACK work.

As in previous case; to not bloat this log and focus only on my OSDev journey I created dedicated notes (starting from PipeWire).

- [[PipeWire]]

- [[Projects/OS Project/Raw Log|PipeWire Log]]